Here’s How to Try Apple’s New AI Image Editor

There’s a new Apple image editor, if you know where to look. The iPhone kings teamed up with researchers at the University of California at Santa Barbara to build a tool that lets you edit photos and images with text-based instructions. It doesn’t have an official release, but the researchers are hosting a demo you can try for yourself, first spotted by Extreme Tech.

The project is called Multimodal Large Language Model Guided Image Editing (MGIE). There are a lot of AI image editors on the market right now. Photoshop now comes with AI tools built in, and others such as OpenAI’s DALL-E let you edit images in addition to generating them out of whole cloth. If you’ve ever tried to use them, however, you know it can be a little frustrating. In many cases, the AI has a hard time understanding exactly what you’re looking for.

The innovation with MGIE is adding another layer of AI interpretation. When you tell the AI what you want to see, MGIE first uses a text-based AI to make your instructions more explicit and descriptive. “Experimental results demonstrate that expressive instructions are crucial to instruction-based image editing,” the researchers said in a paper published on arXiv. “Our MGIE can lead to a notable improvement.”

Apple published an open-source version of the software on GitHub. If you’re savvy, you can get a version of MGIE running on your own, but the researchers have also set up a demo of the tool on Hugging Face. It runs a little slow when a lot of people are using it, but it’s a fun experiment.

Gigantic tech companies like Apple spend billions of dollars on projects that no one ever gets to see, so it’s entirely possible this so-called MGIE tool will never get an official release. Apple didn’t immediately respond to a request for comment.

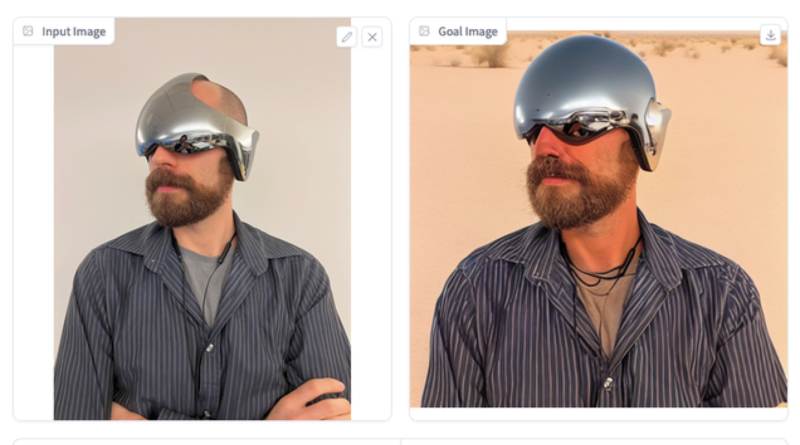

We took it for a spin ourselves here at the Gizmodo office. I uploaded a picture of my colleague and closest advisor Kyle Barr wearing a strange pair of sunglasses he picked up from Netflix at this year’s Consumer Electronics Show. I told the AI “the man is standing in the desert.” Before generating the image, the MGIE tool extrapolated:

“The man is wearing a metal helmet and standing in a desert setting. The environment around him is arid and barren, with sand dunes stretching as far as the eye can see.”

After playing around with the tool for far longer than we should have, we can say it’s clearly subject to a lot of the same limitations as any other AI image generator. A lot of the time, the results are bizarre and nothing like what you asked for. But in some cases, it did an impressive job, and in defense of the program, AI does better with familiar subjects. “Familiar” is not something you would call Kyle’s sunglasses.